How to turn a regular smartphone camera into a 3-D one

With a few hardware changes, such as a ring of near-infrared LEDs, a Microsoft LifeCam is adapted to work as a depth camera.

Just about everybody carries a camera nowadays by virtue of owning a cell phone, but few of these devices capture the three-dimensional contours of objects as a depth camera can. Depth cameras are quickly gaining prominence for their potential in pocket-sized devices: if our phones can capture the contours of everything from street corners to the arrangement of our living rooms, developers can create applications ranging from better interactive games to helpful guides for the visually impaired.

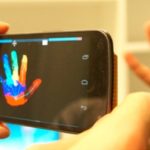

Microsoft researchers say simple hardware changes and machine learning techniques let a regular smartphone camera act as a depth sensor.

Yet while efforts like Google's Project Tango are adding depth cameras to mobile gadgets, new research from Microsoft shows that with some simple modifications and machine-learning techniques, an ordinary smartphone camera or webcam can be used as a 3-D depth camera. The idea is to make 3-D application development more accessible by lowering the cost and technical barriers to entry for such devices, and to make the 3-D depth cameras themselves much smaller and less power-hungry.

A group led by Sean Ryan Fanello, Cem Keskin, and Shahram Izadi of Microsoft Research is due to present a paper on the work Tuesday at Siggraph, a computer graphics and interaction conference in Vancouver, British Columbia.

To modify the cameras, the group removed the near-infrared filter, which everyday cameras often use to block normally unwanted light signals in pictures. Then they added a filter that allowed only infrared light through, along with a ring of several cheap near-infrared LEDs. By doing so, they essentially made each camera act as an infrared camera.

With some modifications, researchers at Microsoft can use regular cameras to map the depth of hands and faces.

"We kind of turned the camera on its head," Izadi notes.

The Microsoft team says it wanted to use the intensity of reflected infrared light as something like a cross between a sonar signal and a torch in a dark room. The light would bounce off a nearby object and return to the sensor with a corresponding intensity. Objects are bright when they're close and dim when they're far away, which is intuitive to us when it comes to visible light. But the group needed to train the machines (in this case a Samsung Galaxy Nexus smartphone and a Microsoft LifeCam Web camera) on that relationship, so the camera could determine if it was seeing, say, a large hand in the distance or a small hand up close.
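To see why brightness alone is an ambiguous depth cue, consider a rough sketch in Python. It assumes an idealized inverse-square falloff of reflected light with distance, which the article does not state, and it is not the researchers' method. The function names and the `albedo` (surface reflectivity) and `led_power` parameters are hypothetical, chosen only for illustration.

```python
import math

def intensity_at(depth_m, albedo=1.0, led_power=1.0):
    """Reflected intensity under an assumed inverse-square falloff.

    albedo and led_power are illustrative parameters, not measured values.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return led_power * albedo / depth_m ** 2

def naive_depth(intensity, albedo=1.0, led_power=1.0):
    """Invert the assumed falloff model: depth = sqrt(power * albedo / intensity)."""
    if intensity <= 0:
        raise ValueError("intensity must be positive")
    return math.sqrt(led_power * albedo / intensity)

# Under this model, doubling the distance quarters the brightness.
near = intensity_at(0.25)
far = intensity_at(0.50)
ratio = near / far

# The catch: a poorly reflecting surface up close looks exactly like
# a highly reflecting surface farther away.
dim_close = intensity_at(0.25, albedo=0.25)
bright_far = intensity_at(0.50, albedo=1.0)

# Inverting with the wrong reflectivity gives the wrong depth.
wrong_depth = naive_depth(dim_close, albedo=1.0)  # true depth was 0.25 m
```

In this toy model a fixed formula recovers depth only if the surface's reflectivity is known in advance. That is one way to read the article's point that the cameras had to be trained on the relationship between brightness and distance rather than handed a simple rule.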

For this project, the researchers decided to focus on just one challenge: modeling human hands and faces, not all kinds of objects and environments. After building up a set of training data, which included images of hands, the group found it could measure a person's motions at a speed of 220 frames per second. In a demonstration the group showed how such tracking could be used to navigate a map, such as by making grasping motions or spreading hands apart, or to play a simple game, such as by virtually slicing a flying banana in the air.

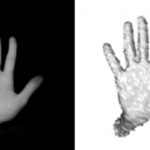

Training hand: A hand captured by a modified camera working as a depth camera (left) is used by Microsoft researchers’ machine-learning algorithm to produce an estimate of its depth (right).

While the training data focused on faces and hands, the group wasn't actually training the machines to recognize hands or faces as we'd think of them, but just the properties of the skin's reflection. The huge amount of training data allows the machine to build enough associations with the data points in the pictures that it can then use additional properties of the image to estimate the depth. Microsoft chose skin because it has so many implications for navigating Xbox and Windows environments, but Kohli points out that the machine-learning techniques could transfer anywhere.

"The only limitation is what sort of training data that you give it," he says. "The approach in itself can be tailored to work on any other scenario."