Sidecar Pattern in Security

The sidecar pattern has proven to be a powerful tool in the world of containers and appears in many use cases. In this post we analyze some of the most interesting ones from an IT security perspective.

What is it?

The sidecar pattern extends or improves the functionality of a process (the main application) by running auxiliary processes alongside it, with almost no coupling between them. Instead of implementing the functionality with libraries, we use processes or containers that have it built in, which gives us greater isolation and encapsulation. At runtime the sidecar shares the lifecycle of the main process (it starts and stops with it) as well as other computing resources (storage, network, ...). It is nevertheless a distinct component, with its own software lifecycle, that the main application’s team incorporates at deployment time.

This pattern existed before the rise of containers (using netcat and ssh tunnels, pipes, rotatelog, ...), but its implementation was neither as smooth nor as complete as it is with containers.

Traditionally, the two ways to extend an application were libraries and services; containers give us a third. Let’s look at the pros and cons of each method:

- Intra-process (libraries)

  + No latency

  - Lack of isolation. Flaws in one component could affect others

  - One version for each language is needed

  - Dependency management, integration issues, ...

- Services

  + Dependency management

  - Latency

  - Different runtime lifecycle, integration issues (interfaces)

  - Security, access control, ...

- The sidecar pattern

  + Dependency management

  + Not shared, without integration issues

  + Transparency (almost always)

  - Resource competition

When to use it?

The sidecar pattern is not a silver bullet. Several criteria help us decide when it is a good choice (+) and when its use is discouraged (-):

+ The component is owned by a remote team

+ Main and sidecar containers are both required to run on the same host

+ The main application has no extension mechanisms

+ Always with a container orchestrator

+ When a shared runtime lifecycle and independent updates are both needed

- When the added latency is unacceptable

- When the resource cost is not worth the advantage of isolation

- When application and sidecars have to scale differently

Use Cases

The sidecar pattern lets us give our applications a wide range of capabilities, such as:

- Application management: Sidecar watches the environment for changes and restarts or notifies the main application in order to update its configuration.

- Infrastructure services (credential management, configuration management, DNS, adaptors, security, access control, ...)

- Monitoring (resources, network traffic, ...)

- Protocol adapters

Analysis

After a preliminary analysis, three use cases stand out from a security perspective. We describe each of them below.

Credential management

A sidecar retrieves the credentials needed to access one of the application’s external services and stores them in files, from which the main application reads them to establish a communication channel. This gives the main application a very simple interface to its credentials (reading a file) that is agnostic of the actual credential provider.

There are two variants: in one, the credentials are retrieved at application startup and the sidecar exits after storing them; in the other, the sidecar keeps watching for changes in the credentials. In both cases the two containers share a volume on the Docker host where they run, and the credentials are stored in that volume.
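As a rough illustration of the file-based interface described above, the following Python sketch shows a sidecar-side helper that publishes a secret atomically with owner-only permissions, and an application-side reader that reloads the value when the file is replaced. The names `write_secret` and `CredentialFile` are hypothetical, and how the sidecar obtains the secret from its provider is left out. Note that restrictive file permissions do not protect against a user with root access to the host.

```python
import os
import tempfile
from pathlib import Path


def write_secret(path: Path, secret: str) -> None:
    """Sidecar side: publish the secret atomically with owner-only permissions."""
    # mkstemp creates the file readable and writable only by its owner.
    # Writing to a temporary file and renaming it means the reader never
    # observes a half-written credential.
    fd, tmp_name = tempfile.mkstemp(dir=path.parent)
    try:
        with os.fdopen(fd, "w") as tmp:
            tmp.write(secret)
        os.replace(tmp_name, path)
    except BaseException:
        os.unlink(tmp_name)
        raise


class CredentialFile:
    """Application side: read the secret and reload it when the file changes."""

    def __init__(self, path: Path) -> None:
        self._path = path
        self._stamp = None
        self._value = None

    def get(self) -> str:
        stat = self._path.stat()
        # A replaced file has a new inode, so rotation is detected even when
        # two writes land within the same timestamp tick.
        stamp = (stat.st_mtime_ns, stat.st_ino)
        if stamp != self._stamp:
            self._value = self._path.read_text()
            self._stamp = stamp
        return self._value
```

In the first variant the sidecar would call `write_secret` once and exit; in the second it would call it again whenever the provider rotates the credential, and the application would pick up the new value on its next `get()`.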

From a security point of view, the threat in this scenario is that an unauthorized person could gain access to the stored credentials. The attack mechanisms are:

- Administrators of the docker host can gain unauthorized access to the credentials.

- Persons authorized to deploy applications can gain unauthorized access to the credentials.

For the analysis we tested two different products for deploying the main application and its sidecar, in order to check both attack mechanisms:

- docker-compose (and Swarm as they have a very similar behaviour): A project (stack in Swarm) is created with the two containers.

- Kubernetes deployment: Containing a pod with both containers.

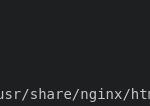

We used two containers to test how it works: an Nginx container as the main application and an Alpine container as the sidecar, which writes the secret into a file.

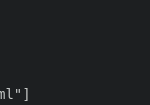

main.dockerfile

sidecar.dockerfile

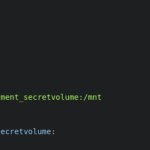

With docker-compose we used two distinct versions of the file format, which let us test two different ways of working with shared volumes, each with its pros and cons (see the conclusions below):

compose files

Additionally, a second project is used to simulate a malicious container trying to mount the volume containing the secret.

compose-malicious.yml

With Kubernetes we have used a simple deployment descriptor with the two containers and a shared volume:

kubernetes deployment file

Conclusions

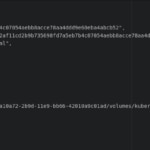

With both solutions we found that the credentials can be accessed through the Docker host filesystem by any user with the right permissions (as would be the case for a platform administrator). It is only a matter of searching a bit (using docker inspect) to find the paths where the files live and read their content. Compose has a further issue: by default, removing the project does not remove its volumes, which remain on the Docker host until a prune command removes them. We must pass the -v parameter when removing the project in order to delete the volumes used by the containers, along with the sensitive information stored in them.

docker inspect Compose

docker inspect Kubernetes
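To give an idea of how little searching is involved, the sketch below lists the host paths behind a container's mounts from the JSON printed by `docker inspect <container>`. It assumes the usual layout of that output (a JSON array whose entries carry a `Mounts` list with `Source` and `Destination` fields). The function name and the sample values are illustrative and are not taken from the tests described in this post.

```python
import json


def volume_mounts(inspect_output: str) -> list:
    """Return (container name, host path, path inside the container) per mount."""
    found = []
    for container in json.loads(inspect_output):
        for mount in container.get("Mounts", []):
            found.append(
                (
                    container.get("Name", ""),
                    mount.get("Source", ""),
                    mount.get("Destination", ""),
                )
            )
    return found


# Illustrative input only: a trimmed-down example of what the command prints.
SAMPLE = """
[
  {
    "Name": "/demo_main_1",
    "Mounts": [
      {
        "Type": "volume",
        "Name": "demo_secrets",
        "Source": "/var/lib/docker/volumes/demo_secrets/_data",
        "Destination": "/secrets"
      }
    ]
  }
]
"""

for name, host_path, container_path in volume_mounts(SAMPLE):
    print(f"{name}: {host_path} -> {container_path}")
```

Anyone who can run the command and read the reported host path can read the files stored there.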

In the second scenario, Kubernetes gives us better isolation: even inside a namespace, one pod cannot access another pod’s resources, as there is no naming scheme in the descriptor that allows this cross access. With docker-compose, when using named volumes, a malicious project can declare a volume as external and use the name generated by Compose (projectName_volumeName) to get access to it. Until version 3.0 of the file format it was possible to use anonymous volumes (imported with volumes_from), which avoided this issue: they had no name, so they could not be accessed from outside the local project.

As we have seen, using the sidecar pattern in this case exposes the credentials to compromise, either through direct access to the Docker host filesystem or through the deployment of a malicious container (when using Compose), so its use is not advisable.

Alternatives

As alternatives to the use of the sidecar pattern with shared storage, the following options could be proposed:

- Avoid using a sidecar. Exec the container’s process from a wrapper script that retrieves the credentials and makes them available to the application through environment variables. This keeps a simple interface for the application while removing the risk of credential disclosure. This alternative is only valid if the credentials do not change over time.

startup script

- Use a sidecar that exposes a service for retrieving the credentials, so that the main application accesses a URL instead of a file. As with the previous option, we remove the risk of credential disclosure, since no data is stored, but in return we must keep the sidecar container running even if the credentials never need updating.

- Use built-in orchestrator mechanisms that let us define secrets, which are later exposed to containers as environment variables or tmpfs files.

- Don’t use credentials in the application … ;-) The safest credential is the one that does not exist. We’ll see later how to do it...
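The first alternative in the list above (a wrapper that retrieves the credentials and then execs the application) could be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions: `fetch` stands for whatever hypothetical call talks to the credential provider, and the variable name `DB_PASSWORD` in the usage note is only an example.

```python
import os
from typing import Callable, Mapping, Sequence


def build_environment(base: Mapping[str, str], credentials: Mapping[str, str]) -> dict:
    """Return a copy of the base environment with the credentials added."""
    env = dict(base)
    env.update(credentials)
    return env


def run_with_credentials(
    command: Sequence[str],
    fetch: Callable[[], Mapping[str, str]],
) -> None:
    """Fetch the credentials once, then replace this process with the application."""
    env = build_environment(os.environ, fetch())
    # exec replaces the wrapper with the application instead of spawning a
    # child, so the application keeps the wrapper's PID and receives the
    # container's signals directly. Nothing is written to a shared volume.
    os.execvpe(command[0], list(command), env)
```

A container entrypoint could call, for example, `run_with_credentials(["nginx", "-g", "daemon off;"], fetch)`, where `fetch` returns something like `{"DB_PASSWORD": "..."}`. Because the credentials are read only once, this matches the restriction that they must not change over time.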

TLS Proxy (adapter pattern)

The adapter pattern lets us present a different view of our application to external consumers. In this case we offer consumers an HTTPS interface without modifying our container (or its configuration), which continues to expose its functionality over HTTP. This deployment pattern has many advantages: key pairs and certificates are not managed by the development team or the CI/CD tooling, but are retrieved at startup time. This is easier for everybody and empowers security teams, who can define every single TLS parameter, manage the revocation and renewal of certificates and keep full control over their distribution.

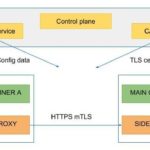

In this use case the sidecar retrieves the necessary configuration (key pairs, certificates, TLS configuration, proxy configuration, ...) at startup time and acts as a proxy for incoming connections, which it forwards to the main container once the TLS tunnel is established.

For the study two different options have been tested: Istio and Linkerd.

Both products implement a service mesh and let us inject a sidecar into our deployment that provides features for network management, security, monitoring, logging, ... Istio has TLS management completely integrated, while in Linkerd the integration is in an experimental phase.

As part of the solution, a certificate authority is deployed to provide the certificates and key pairs. Every time a component is deployed in the service mesh, the sidecar connects to the control plane and retrieves all the configuration the proxy needs. As both containers share the network stack, the proxy handles the incoming connections and, once the TLS tunnel is established, forwards the traffic to the main container via the loopback interface.
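To make the mechanism concrete, here is a minimal Python sketch of a TLS-terminating proxy that forwards decrypted traffic to the main container over loopback. It is not how Istio or Linkerd implement their proxies; it only illustrates the idea. The function names are hypothetical, and the certificate and key are assumed to be already available as local files rather than retrieved from a control plane.

```python
import asyncio
import ssl
from typing import Optional


def make_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Server-side TLS settings, owned by whoever builds the sidecar."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    context.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return context


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then close the destination."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass
    finally:
        writer.close()


def _make_handler(backend_port: int):
    async def handle(client_reader, client_writer):
        try:
            # Both containers share the network stack, so the main
            # application is reachable on the loopback interface.
            backend_reader, backend_writer = await asyncio.open_connection(
                "127.0.0.1", backend_port
            )
        except OSError:
            client_writer.close()
            return
        await asyncio.gather(
            _pipe(client_reader, backend_writer),
            _pipe(backend_reader, client_writer),
        )

    return handle


async def start_proxy(
    listen_port: int,
    backend_port: int,
    tls_context: Optional[ssl.SSLContext],
    host: str = "0.0.0.0",
) -> asyncio.AbstractServer:
    """Accept connections (TLS when a context is given) and forward them."""
    return await asyncio.start_server(
        _make_handler(backend_port), host, listen_port, ssl=tls_context
    )
```

A sidecar would call `start_proxy(443, 80, make_tls_context(cert, key))` inside an event loop and keep it running, while the application keeps listening on plain HTTP. Passing `None` as the context disables TLS, which is only useful for local testing.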

With this solution developers deploy their applications exposing only the HTTP port. Building and publishing the sidecar image and configuring all the required parameters is the responsibility of the security team. This is done only once, which simplifies their work: they don’t need to review or configure every deployment, only the sidecar image. If a vulnerability is detected, only one image must be patched and the replacement is almost instantaneous, since it is only necessary to restart the sidecar with the new version.

AuthN and AuthZ (ambassador pattern)

The ambassador pattern lets us give our application a different but simpler view of the external services it consumes. Again, by using a sidecar, we allow the application to connect to its external services without explicitly providing credentials. The proxy in the sidecar intercepts the communication, retrieves the credentials, establishes the connection and authenticates; once the channel is open, data starts to flow between the application and the external service.

This option is only available in Istio (it is experimental in Linkerd 2.2), and only mTLS is supported. The service mesh’s control plane lets us configure which services can talk to one another. For this to work, an identity is assigned to every service and is used to manage AuthN and AuthZ.

After a successful authentication, RBAC authorization can be enabled by defining the corresponding rules at namespace, service or method level inside the mesh.

The CA service in the mesh provides the key pairs and the certificate of the assigned identity to the sidecar proxy, which uses them in the connection handshake with the peer service. This way access rules can be established to increase security inside the cluster in which the services run by allowing only authorized connections between them.
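The kind of access rule described above can be pictured with a small default-deny check. This is only a conceptual Python sketch, not Istio's rule format or API: the `Rule` fields, the function name and the identities used in the example are all illustrative assumptions. It presumes that the caller's identity has already been authenticated from its certificate during the mTLS handshake.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str                       # identity taken from the client certificate
    destination: str                  # service being called
    methods: frozenset = frozenset()  # allowed methods; empty means any method


def is_allowed(rules, source: str, destination: str, method: str) -> bool:
    """Default deny: a call is allowed only if at least one rule matches it."""
    return any(
        rule.source == source
        and rule.destination == destination
        and (not rule.methods or method in rule.methods)
        for rule in rules
    )


# Example policy: the frontend may only read orders, and the billing service
# may call the orders service with any method.
RULES = [
    Rule("frontend", "orders", frozenset({"GET"})),
    Rule("billing", "orders"),
]

print(is_allowed(RULES, "frontend", "orders", "GET"))
print(is_allowed(RULES, "frontend", "orders", "DELETE"))
```

Because the decision is keyed on identities rather than on shared secrets, the rules can be reviewed and changed without touching any credential.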

Currently almost all services (including many DBMSs) support AuthN and AuthZ mechanisms based on digital certificates. This makes the option quite attractive because it simplifies credential management: there are no longer secrets to manage, only identities, so backends and service configuration can be handled by system administrators without fear of revealing secrets.

Istio also supports origin authentication by validating the JWTs that incoming requests may carry.