Serverless

Serverless architecture, also known as FaaS (Functions as a Service), executes an application in ephemeral, stateless containers that are created at the moment an event triggers the application. Contrary to what the term suggests, serverless does not mean “without a server.” Rather, servers become an anonymous element of the infrastructure, relying on the advantages of cloud computing.

Leaving aside the hype that accompanies the term, in this post we explore the possibilities serverless offers for architecture and application development. We also review the main alternatives for public and private clouds.

The benefits of serverless

The main advantage of a serverless architecture is that developers can forget about managing the infrastructure on which the service (the function) runs and concentrate on its functionality: the entire development cycle is simplified.

It also provides a high degree of decoupling between services, favoring microservice-based architectures. This greatly facilitates continuous deployment and simplifies rollbacks when they are needed.

Finally, serverless architecture can reduce infrastructure spending, since costs are incurred only when a request arrives and the function is actually executed.
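To make the pay-per-use argument concrete, here is a minimal sketch comparing a per-invocation cost model with an always-on server. The billing model (a fee per request plus a fee per GB-second of execution) is an assumption loosely modeled on typical FaaS pricing, and every price below is a made-up parameter, not any provider's real tariff.

```python
from dataclasses import dataclass


@dataclass
class FaasPricing:
    """Hypothetical pay-per-use tariff (not any provider's real prices)."""
    per_million_requests: float   # fee per one million invocations
    per_gb_second: float          # fee per GB of memory per second of execution


def faas_monthly_cost(requests: int, avg_seconds: float, memory_gb: float,
                      pricing: FaasPricing) -> float:
    """Pay-per-use: cost depends only on invocations and execution time."""
    request_fee = requests / 1_000_000 * pricing.per_million_requests
    compute_fee = requests * avg_seconds * memory_gb * pricing.per_gb_second
    return request_fee + compute_fee


def server_monthly_cost(hourly_rate: float, hours: float = 730.0) -> float:
    """Always-on server: the same cost whether or not any request arrives."""
    return hourly_rate * hours


if __name__ == "__main__":
    tariff = FaasPricing(per_million_requests=0.25, per_gb_second=0.00002)
    for monthly_requests in (0, 100_000, 10_000_000):
        faas = faas_monthly_cost(monthly_requests, 0.2, 0.5, tariff)
        print(f"{monthly_requests:>10,} requests/month: "
              f"FaaS {faas:7.2f} vs server {server_monthly_cost(0.05):7.2f}")
```

With these invented numbers the function costs nothing while idle, whereas the server is billed every hour; where the break-even point lies depends entirely on real prices and workload.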

Serverless architecture

A function executed in a serverless architecture must be handled by a wrapper for the appropriate language (Python, Node.js, etc.) that can receive the input data, pass it to the function, execute it and send back the output data. Each generic wrapper for a given language is called an environment. These environments are instantiated as containers whenever a function has to be executed.
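As a rough illustration of what an environment does, the following sketch wraps a user function: it receives the input data, passes it to the function, executes it and returns the output. The JSON-in, JSON-out contract, the `make_environment` helper and the `hello` function are all hypothetical; each real platform defines its own wrapper protocol.

```python
import json
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


def make_environment(handler: Handler) -> Callable[[str], str]:
    """Build a generic wrapper around a user function.
    Hypothetical contract: JSON text in, JSON text out."""
    def invoke(raw_input: str) -> str:
        try:
            event = json.loads(raw_input) if raw_input.strip() else {}
            result = handler(event)          # execute the user's function
            return json.dumps({"status": "ok", "body": result})
        except Exception as exc:             # report failures instead of crashing the container
            return json.dumps({"status": "error", "error": str(exc)})
    return invoke


def hello(event: Dict[str, Any]) -> Dict[str, Any]:
    """Example user function: business logic only, no I/O plumbing."""
    return {"message": f"Hello, {event.get('name', 'world')}!"}


if __name__ == "__main__":
    env = make_environment(hello)
    print(env('{"name": "Ana"}'))
```

The user function never parses input or serializes output; that plumbing lives in the environment, which is what lets one wrapper serve every function written in the same language.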

When a developer uploads or modifies a function in the FaaS infrastructure, a container image is built that holds the environment needed to execute that function, along with the function itself. The resulting image is stored in a registry accessible to the FaaS infrastructure. Equally important, a corresponding entry is created in the API gateway, which channels incoming requests (generally HTTP REST) and starts the container or containers (depending on the configured concurrency) that house the function that must process each request. When a function's container is no longer needed, it may be destroyed immediately or after a defined period.
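The life cycle described above can be simulated in a few lines. This is a toy model under stated assumptions, not how any particular platform is built: a hypothetical `Gateway` maps routes to deployed functions, starts a container on the first request (a cold start), reuses it for later requests and destroys it once it has been idle longer than a configurable period. Concurrency is not modeled, and time is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Container:
    handler: Callable[[str], str]
    last_used: float


@dataclass
class Gateway:
    idle_timeout: float  # seconds a container may stay idle before being destroyed
    registry: Dict[str, Callable[[str], str]] = field(default_factory=dict)  # route -> function
    running: Dict[str, Container] = field(default_factory=dict)
    cold_starts: int = 0

    def deploy(self, route: str, handler: Callable[[str], str]) -> None:
        """Uploading a function stores it and creates the gateway entry."""
        self.registry[route] = handler

    def reap(self, now: float) -> None:
        """Destroy containers that have been idle longer than idle_timeout."""
        expired = [r for r, c in self.running.items() if now - c.last_used > self.idle_timeout]
        for route in expired:
            del self.running[route]

    def request(self, route: str, payload: str, now: float) -> str:
        self.reap(now)
        container = self.running.get(route)
        if container is None:  # no live container for this function: cold start
            container = Container(self.registry[route], now)
            self.running[route] = container
            self.cold_starts += 1
        container.last_used = now
        return container.handler(payload)
```

For example, with `idle_timeout=60`, requests at t=0 and t=10 share one container, while a request at t=100 finds it destroyed and pays a second cold start.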

The time needed for a function to be ready to start executing is typically several hundred milliseconds, depending on the technology used. This latency can be reduced with so-called hot functions, which keep the instance running to avoid the overhead of starting a container, considerably improving throughput when demand for the service is high.
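A back-of-the-envelope way to see why hot functions matter: if a fraction of requests pays the cold start-up cost, the mean latency is the execution time plus that fraction times the start-up time. The figures below are illustrative only; the 250 ms start-up value is simply picked from the 200-300 ms range reported for Iron Functions later in this post, and the 20 ms execution time is invented.

```python
def mean_latency_ms(exec_ms: float, cold_start_ms: float, cold_fraction: float) -> float:
    """Expected latency when a fraction of requests must wait for a container to start."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return exec_ms + cold_fraction * cold_start_ms


# Every request starts a new container (one container per execution).
print(mean_latency_ms(exec_ms=20, cold_start_ms=250, cold_fraction=1.0))    # 270.0
# Hot function: only 1 request in 1000 reaches a cold container.
print(mean_latency_ms(exec_ms=20, cold_start_ms=250, cold_fraction=0.001))  # 20.25
```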

Current serverless infrastructures use RPC to delegate execution from a REST API gateway to a group of services.

State of the art and rollout options in the public cloud

The main public cloud providers each offer their own version of FaaS as a product, with its own APIs and technologies.


Currently, the most widespread use of serverless architectures is FaaS offered as a public cloud service, such as AWS Lambda, Google Cloud Functions or Microsoft Azure Functions. In this case, the service is managed and served by the provider.

In the case of AWS Lambda, very few details of its internal architecture have been made public. According to the official documentation, it uses a container model, but it says nothing about the orchestrator used internally.

In Google Cloud Functions, functions run in a native Node.js environment. An emulator is available to test functions locally or in CI/CD before publishing them.

Azure Functions, despite seeming to be a similar service, has a radically different implementation: Azure Functions run on Azure WebJobs. Microsoft has published its execution environment on GitHub.

State of the art and rollout options on private cloud

For on-premises deployments, there are several options depending on whether the rollout takes place on bare metal, on an IaaS such as OpenStack, or on a PaaS such as Kubernetes or Mesos:

- Iron Functions (on your own infrastructure, or on COEs such as Kubernetes or DC/OS)

- OpenStack Picasso

- Fission (Kubernetes only)

An on-premises serverless architecture can either be deployed like any other application or be integrated as a service offered by an IaaS or a PaaS. Iron Functions is an example of the former: the user rolls out FaaS on physical servers or in a private space at a cloud provider (an OpenStack tenant, an AWS VPC, etc.).

In the second case, FaaS is integrated with the infrastructure service, taking advantage of the multi-tenancy, user control and other features that the IaaS or PaaS software provides. The OpenStack Picasso project is an attempt to integrate Iron Functions as another OpenStack service, while Fission implements FaaS on Kubernetes. Both implement FaaS, but with totally different approaches.

OpenStack Picasso deploys Iron Functions in the infrastructure and adds OpenStack Keystone's user control services to it. However, OpenStack has no control over the infrastructure that executes the functions: both worlds run independently, and Iron Functions operates outside OpenStack. As a result, there are no quotas, no control of compute nodes and no multi-tenancy for the FaaS containers. It only allows the creation of private functions that are visible solely to the users of an OpenStack tenant.

Both Fission and Iron Functions use Docker containers to house and execute the lambda function.

Deploying FaaS on Kubernetes is probably the most interesting case, as it lets us combine traditional microservices with serverless ones in a simple way. Everything is integrated in Kubernetes, using the services a Kubernetes cluster provides, such as monitoring, log aggregation and service publication.

Cold start-up time and hot functions

Iron Functions creates a container for each execution. The average start-up time of a container is around 200-300 ms, which in most cases is too long to offer acceptable latency for an HTTP request. This is where the so-called Iron Hot Functions (or Hot Containers) come into play: they keep containers running while they are receiving requests, avoiding the cold start-up time for the second and subsequent requests.


Little is known about how Hot Functions are implemented in Iron Functions, since Iron's documentation only mentions that Hot Containers communicate through standard input and output. In addition, Iron Hot Functions require specific code, because they internally modify the format of the events exchanged with the function.
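Since the documentation only says that Hot Containers talk to the function through standard input and output, the sketch below shows the general idea rather than Iron's actual protocol: a long-lived process that reads one request per line, handles it and writes one response per line, instead of exiting after a single execution. The newline-delimited JSON framing is an assumption chosen for readability. It also shows why a hot function needs specific code: the function must loop over a stream of events instead of receiving just one.

```python
import io
import json
from typing import Any, Dict, TextIO


def handle(event: Dict[str, Any]) -> Dict[str, Any]:
    """Business logic: the same code a 'cold' function would run once."""
    return {"echo": event}


def serve(stdin: TextIO, stdout: TextIO) -> int:
    """Hot-function loop (hypothetical framing: one JSON document per line).
    The process stays alive and serves requests until its input is closed.
    A deployed hot function would call serve(sys.stdin, sys.stdout)."""
    served = 0
    for line in stdin:
        if not line.strip():
            continue
        response = handle(json.loads(line))
        stdout.write(json.dumps(response) + "\n")
        stdout.flush()  # the caller waits for this reply before sending the next request
        served += 1
    return served


# Simulate the platform feeding two requests to the same running process.
replies = io.StringIO()
serve(io.StringIO('{"n": 1}\n{"n": 2}\n'), replies)
print(replies.getvalue(), end="")
```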

Fission keeps a pool of containers that are permanently waiting for events in order to execute functions, so the cold start-up time is much lower (~100 ms), at the cost of consuming those resources even when there are no requests.
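The trade-off Fission makes can be modeled as a pool of generic, pre-started containers: when a request arrives for a function with no assigned container, one is taken from the pool and specialized by loading the function, which is cheaper than a full cold start, and the pool is later refilled. The `WarmPool` class is hypothetical and not Fission's code; the latency constants are illustrative, taken from the ~100 ms figure above and the 200-300 ms range quoted for Iron Functions.

```python
from collections import deque
from typing import Deque, Dict

COLD_START_MS = 250   # illustrative: start a brand-new container
SPECIALIZE_MS = 100   # illustrative: load a function into an already-running container


class WarmPool:
    """Toy model of a pool of generic containers waiting for work."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.idle: Deque[int] = deque(range(size))  # ids of pre-started, generic containers
        self.assigned: Dict[str, int] = {}          # function name -> specialized container id
        self.next_id = size

    def startup_cost_ms(self, function: str) -> int:
        """Latency paid before `function` can run, according to this model."""
        if function in self.assigned:
            return 0                                # already specialized: hot
        if self.idle:
            self.assigned[function] = self.idle.popleft()
            return SPECIALIZE_MS                    # taken from the warm pool
        self.assigned[function] = self.next_id      # pool exhausted: real cold start
        self.next_id += 1
        return COLD_START_MS

    def refill(self) -> None:
        """Start new generic containers; they consume resources even with no requests."""
        while len(self.idle) < self.size:
            self.idle.append(self.next_id)
            self.next_id += 1
```

With `WarmPool(size=1)`, the first function pays 100 ms, a second distinct function arriving before `refill()` pays the full 250 ms, and repeated calls to an already specialized function pay nothing.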

Laboratory: testing serverless with AWS Lambda, IronFunctions and Fission

We ran our own battery of tests to compare the performance of each technology. All tests except those on AWS Lambda were run on the same PC, with the following specifications:

- CPU: Intel i5-4310M CPU @ 2.70GHz

- RAM: 8GiB DDR3 @ 1600MHz

- HDD: 320 GB @ 7200RPM

The tests were run with Siege: each function endpoint was stressed for 10 seconds with 25 concurrent requests.
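A similar test can be approximated in Python for readers without Siege. This is a simplified sketch, not a Siege replacement and not the harness used for the figures below: a hypothetical `load_test` keeps a fixed number of concurrent workers calling a request function until the duration expires, then reports completed requests, requests per second and mean latency. The request function is injectable so the sketch can run without a network.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def load_test(request: Callable[[], object], concurrency: int = 25,
              duration_s: float = 10.0) -> Tuple[int, float, float]:
    """Run `request` from `concurrency` workers for `duration_s` seconds.
    Returns (completed requests, requests per second, mean latency in seconds)."""
    deadline = time.monotonic() + duration_s
    latencies: List[float] = []
    lock = threading.Lock()

    def worker() -> None:
        while time.monotonic() < deadline:
            start = time.monotonic()
            request()
            elapsed = time.monotonic() - start
            with lock:
                latencies.append(elapsed)

    started = time.monotonic()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(worker) for _ in range(concurrency)]
        for future in futures:
            future.result()  # re-raise any error from a worker
    wall = time.monotonic() - started  # includes in-flight requests finishing after the deadline
    count = len(latencies)
    mean = sum(latencies) / count if count else 0.0
    return count, (count / wall if wall > 0 else 0.0), mean
```

Against a deployed function, passing something like `lambda: urllib.request.urlopen(url).read()` (with `url` pointing at the function endpoint) and the default parameters would mirror the 25-concurrent, 10-second setup described above.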

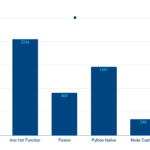

Figure 1. Requests per second on each platform analyzed (higher is better).

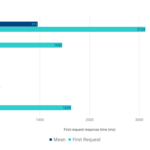

Figure 2. Response time of the first request on each platform analyzed (lower latency is better). The «cold» start-up time is also indicated.

Immediate business value

Every paradigm shift should bring intrinsic benefits that justify adopting the new model. In the case of serverless, some of these benefits are immediate:

- Shorter development time and time-to-market: the contract with the infrastructure lets developers concentrate on functionality, minimizing the time devoted to scalability and the execution environment.

- Resource optimization: without spending time on scaling and sizing our application, we achieve optimization at every level:

- Complete architecture: there is no need to size and assign a machine to each microservice, so there are no unused resources of the kind caused by faulty deployments or oversizing. This means immediate savings for public cloud deployments that sit idle for long periods.

- Automatic per-function scaling: when demand peaks for one or more functions, they scale independently, directing the available resources to the functions that really need them.

- Improvements in current architectures based on massive data processing (batch, ETLs, etc.): in these cases latency is less relevant, but serverless lets us evolve from traditional batch architectures, based on files and Job Control Language (JCL), toward a more stream-oriented model.
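Automatic per-function scaling can be reasoned about with Little's law: the number of concurrent executions a function needs is roughly its request rate multiplied by its average duration. The sketch below applies that rule to each function separately, assuming (hypothetically) that each instance handles one request at a time; the function names and traffic figures are invented for illustration.

```python
import math
from typing import Dict, Tuple


def instances_needed(rate_per_s: float, avg_duration_s: float,
                     per_instance_concurrency: int = 1) -> int:
    """Little's law: requests in flight = arrival rate x time in system."""
    in_flight = rate_per_s * avg_duration_s
    return math.ceil(in_flight / per_instance_concurrency)


def plan(traffic: Dict[str, Tuple[float, float]]) -> Dict[str, int]:
    """Size each function independently from its own (rate, duration) pair."""
    return {name: instances_needed(rate, dur) for name, (rate, dur) in traffic.items()}


# A demand peak on "payments" does not change the sizing of "reports".
print(plan({"payments": (80.0, 0.25), "reports": (2.0, 1.5)}))
# {'payments': 20, 'reports': 3}
```

Note that a function with no traffic is sized to zero instances, which is the serverless counterpart of the idle machines mentioned above.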

A bet on the future

The industry is rapidly adopting serverless architecture. Success stories such as Pokémon Go or Snapchat, where massive demand did not pose a problem for the scalability of the service, vouch for it. In the near future, we expect changes that will make lambda serverless architecture appropriate for most cases.

From the developer's point of view, serverless still has peculiarities one must adapt to, such as its stateless nature. However, these problems are much smaller than those that come with rolling out a traditional architecture.

Function triggers

Serverless infrastructures are currently based on HTTP, which makes them more adaptable. However, in a serverless architecture the communication protocol is just one more piece, so it can be changed and adapted to the needs of each case.

It will also make it possible to write small pieces of code that connect our architecture to data sources beyond HTTP: for example, Kafka, or infrastructure triggers (a machine shutting down, intensive use of a network resource, a specific business metric).
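Treating the protocol as one more piece means the function should not care where its event came from. A minimal sketch of that idea, using an entirely hypothetical `TriggerRouter` (no real platform API is implied): adapters for HTTP, a Kafka topic or an infrastructure alarm would all normalize their input into the same `Event` shape before the router calls the bound functions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Event:
    source: str            # "http", "kafka", "infra", ...
    subject: str           # route, topic or resource that fired
    data: Dict[str, Any]


Handler = Callable[[Event], Any]


class TriggerRouter:
    def __init__(self) -> None:
        self.bindings: Dict[Tuple[str, str], List[Handler]] = {}

    def bind(self, source: str, subject: str, handler: Handler) -> None:
        """Connect a function to an event source without changing its code."""
        self.bindings.setdefault((source, subject), []).append(handler)

    def fire(self, event: Event) -> List[Any]:
        """Deliver the event to every function bound to its (source, subject)."""
        return [h(event) for h in self.bindings.get((event.source, event.subject), [])]


def on_machine_down(event: Event) -> str:
    # Hypothetical reaction to an infrastructure trigger.
    return f"re-provisioning {event.data['host']}"


router = TriggerRouter()
router.bind("infra", "machine.shutdown", on_machine_down)
print(router.fire(Event("infra", "machine.shutdown", {"host": "node-7"})))
```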

In this regard, AWS Lambda leads the market, offering transparent integration with Amazon's other products: functions can be triggered by events in S3 or SQS, for example. Fission also responds to some Kubernetes-specific events.
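For instance, a Python Lambda function triggered by S3 receives an event whose `Records` list describes each object that changed. The handler below is a sketch: it only extracts the event name, bucket and key (object keys arrive URL-encoded in S3 notifications, hence `unquote_plus`). The bucket name and object key in the sample event are hypothetical, and the event is trimmed to the fields used here.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def lambda_handler(event: Dict[str, Any], context: Any) -> List[str]:
    """Entry point invoked by AWS Lambda for an S3 notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys are URL-encoded
        # Business logic would go here (e.g. generate a thumbnail, index a file).
        processed.append(f"{record['eventName']} s3://{bucket}/{key}")
    return processed


# Trimmed, hypothetical sample event for local testing only.
sample = {"Records": [{"eventName": "ObjectCreated:Put",
                       "s3": {"bucket": {"name": "my-bucket"},
                              "object": {"key": "reports/2017+Q3.csv"}}}]}
print(lambda_handler(sample, None))
```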

Composition of functions

In the future, it will be possible to combine functions, which will allow business pipelines to be defined. AWS Step Functions is already beginning to explore this possibility. Such pipelines enable unprecedented technological collaboration among people with diverse skill sets, reducing hiring costs, since those people will not have to specialize in a specific technology.
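Conceptually, a business pipeline is function composition: each step receives the previous step's output as its input. The sketch below composes plain Python functions in order. It is not the AWS Step Functions API (which describes workflows declaratively as state machines), and the `validate`, `apply_fee` and `notify` steps and the 2% fee are hypothetical.

```python
from functools import reduce
from typing import Any, Callable, Dict

Step = Callable[[Dict[str, Any]], Dict[str, Any]]


def pipeline(*steps: Step) -> Step:
    """Chain independent functions into one business flow, left to right."""
    return lambda payload: reduce(lambda acc, step: step(acc), steps, payload)


# Hypothetical steps; each could be a separately deployed function.
def validate(order: Dict[str, Any]) -> Dict[str, Any]:
    if order["amount"] <= 0:
        raise ValueError("amount must be positive")
    return order


def apply_fee(order: Dict[str, Any]) -> Dict[str, Any]:
    return {**order, "total": round(order["amount"] * 1.02, 2)}


def notify(order: Dict[str, Any]) -> Dict[str, Any]:
    return {**order, "notified": True}


process_order = pipeline(validate, apply_fee, notify)
print(process_order({"id": 1, "amount": 100.0}))
```

Each step only knows its own input and output, so steps written by different people can be reordered or reused in other pipelines.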

In traditional architectures, programmers must be aware of the components auxiliary to the specific business function, such as security and logging, in order to build a complete service. In a pure lambda architecture, these security and control mechanisms can be created as part of the infrastructure and chained to the specific business function. Lambda systems that support composition make it possible to enable auxiliary or complementary mechanisms without the developer who implements a specific function having to know about them. Once again, this lets programmers concentrate on the value their code adds, and it also reduces the experience needed to put a service into production. In addition, since the auxiliary functions are integrated into the platform, they cannot be bypassed, which makes the system more secure and minimizes the risks derived from incorrect use.
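The idea of chaining security and logging to a business function without its author knowing about them can be sketched with wrappers that the platform, not the developer, applies at deployment time. Everything here is hypothetical (`platform_deploy`, the token check, the in-memory audit log, the account data); it only illustrates that the business function contains no security or logging code and that callers never reach it unwrapped.

```python
import functools
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[str] = []
VALID_TOKENS = {"secret-token"}  # hypothetical placeholder credential store

Fn = Callable[[Dict[str, Any]], Any]


def require_auth(fn: Fn) -> Fn:
    @functools.wraps(fn)
    def wrapper(request: Dict[str, Any]) -> Any:
        if request.get("token") not in VALID_TOKENS:
            raise PermissionError("unauthorized")
        return fn(request)
    return wrapper


def audit(fn: Fn) -> Fn:
    @functools.wraps(fn)
    def wrapper(request: Dict[str, Any]) -> Any:
        AUDIT_LOG.append(f"call {fn.__name__}")  # logged before auth, so rejected calls are audited too
        return fn(request)
    return wrapper


def platform_deploy(fn: Fn) -> Fn:
    """The platform always applies its controls; developers cannot opt out."""
    return audit(require_auth(fn))


def balance(request: Dict[str, Any]) -> int:
    # Pure business logic: no authentication or logging code here.
    return {"acc-1": 250}.get(request["account"], 0)


deployed = platform_deploy(balance)
print(deployed({"token": "secret-token", "account": "acc-1"}))  # 250
```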

By nature, lambda architectures work in streaming mode, which enables advanced techniques for redirecting traffic and testing solutions. Some well-known techniques are:

- A/B testing

- Canary Release

- Parallel testing
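Because every request passes through the platform, redirecting traffic becomes a routing decision. A minimal sketch of a canary release, assuming a hypothetical router that sends a configurable share of users to a new function version (`v2`): hashing a stable user id, rather than choosing at random per request, keeps each user on the same version, which is also what an A/B test needs.

```python
import hashlib


def choose_version(user_id: str, canary_share: float,
                   stable: str = "v1", canary: str = "v2") -> str:
    """Deterministically route `canary_share` (0..1) of users to the canary version."""
    if not 0.0 <= canary_share <= 1.0:
        raise ValueError("canary_share must be between 0 and 1")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Keep 53 bits so the division is exact and the result stays strictly below 1.0.
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return canary if bucket < canary_share else stable


users = [f"user-{i}" for i in range(10_000)]
share = sum(choose_version(u, 0.05) == "v2" for u in users) / len(users)
print(f"{share:.1%} of users routed to v2")
```

The printed share should land near the configured 5%; raising `canary_share` step by step gradually moves the remaining users to the new version.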

Reducing the particularities of each case lets teams focus on what to develop rather than how to develop it, reducing both time-to-market and the risk of errors in production environments.

Scheduling and resource placement optimization also have a long way to go. Serverless architecture will make it possible to better predict how to scale each of the functions that make up a service independently, instead of trying to predict the behavior of the service as a whole.

Conclusions

Lambda serverless architectures let service composition evolve in an atomic (lambda functions) and scalable (serverless) way. This translates into real business value through:

- Lower implementation and operating costs, thanks to the self-scaling that lambda architectures offer by definition.

- Simpler maintenance and evolution of the application, thanks to its decomposition into atomic functions.

- Reduction of development times: an improvement in time-to-market.

- Increased security, since security mechanisms can be implemented outside the main lambda function.

- An increase in reliability.

Currently, lambda serverless architectures are limited in the functionality we can implement with them. There is still a long way to go in language support, internal refinement of the architecture and the use of APIs other than REST before they become an architectural alternative for any application.

In the private cloud, the most interesting proposal today may be Fission.io, which runs on the most widely used PaaS platform today: Kubernetes. We believe that building Fission on a preexisting PaaS lets development efforts focus on the core of the application (its differential value), as opposed to approaches that build the solution from scratch (IronFunctions). In addition, it opens the door to hybrid cloud deployments: GKE is Google Cloud Platform's solution for deploying containers in the public cloud.

As regards public cloud services, the vendor lock-in that some of the Amazon, Google or Microsoft environments entail is a disadvantage that discourages their adoption. In the services analyzed, their newness and lack of standardization are, for the moment, a barrier to adoption.

However, there are enough compelling reasons and production success stories to consider this technology (along with its associated design methodology and development practices) one of the most important trends for successfully deploying self-scaling applications with an impressively short time-to-market. In fact, it is already used for critical tasks, such as FINRA's monitoring of the US financial markets, and in the success stories already mentioned, Pokémon Go and Snapchat.

For all these reasons, it is worth becoming familiar with this technology as it evolves, and preparing and selecting applications that can be developed on this paradigm. This knowledge will give us a competitive advantage in the future over more conservative architectures, especially when facing the need for massive scalability.

We encourage you to send us your feedback to BBVA-Labs@bbva.com and, if you are a BBVAer, please join us sending your proposals to be published.

References

- Serverless, by Martin Fowler.

- A very complete compilation of links.

- AWS Lambda.

- Google Cloud Functions.

- Azure Functions.

- IBM OpenWhisk.

- Iron.io: a complete serverless platform.

- Fission.io: serverless over Kubernetes.

- Sparta: A framework written in Go for AWS Lambda.

- Apex: Another framework for AWS Lambda.

- Hyper.sh Functions: another FaaS in the public cloud.