Secret management using Docker containers

Using Docker to deploy software in production systems solves many problems related to the agility and standardization of these processes. But, like any technology that breaks with prior IT processes, it creates new challenges or requires different solutions to long-standing problems. One of these is the management of secrets.

What is a secret?

A secret is a piece of information required for authentication, authorization, encryption and other tasks. It must be kept confidential, and it is indispensable: for example, it is what allows users and systems to connect to one another securely.

A traditional solution

When containers are not used, software and its configuration are deployed on systems with long lifecycles, often lasting years. As a result, secrets are frequently introduced into the system once and then remain on its filesystem for years (at times, for many years).

There are probably countless systems in the IT ecosystem whose service users are still configured with the same non-expiring credentials that were generated when the system was first deployed.

This is, unfortunately, quite common, because of the operational effort required to rotate secrets. It is also insecure: a breach of a system with stored credentials allows those credentials to be used indefinitely. Additionally, if a possible intrusion is detected, rotating the compromised secrets becomes an arduous task, simply because there may be no procedure for doing so, since it is not a task that is performed often.

Of course, there are secure methods that allow traditional systems to rotate secrets and software to use them securely. One example is the so-called “Privileged Account Managers,” whose purpose is to rotate secrets in systems with long lifecycles and which provide clients that let software retrieve those secrets at runtime. Although these solutions work for such near-perpetual systems, they are difficult to adapt to the world of containers.

The use of secrets in containers

Solutions from the legacy world for managing secrets are generally based on either storing them permanently in the system or having software that requests them from third parties. If we analyze the problem of building images that contain stored secrets, we observe the following issues:

- Container images should be immutable: an image is built once, and that same image should run in every environment. It is therefore clear that if the image is built with credentials saved directly in its definition, we have two options:

  - either we share those credentials across multiple environments, incurring serious problems of confidentiality and privilege segmentation;

  - or we build a different image for each environment, ignoring the immutability that images should have.

- Requesting secrets at runtime, through a piece of software included in the image or through sidekick containers, solves the former problem, since the image remains immutable and contains no sensitive information. However, this very fact is itself a problem. If the image contains no sensitive information, can the secrets be retrieved without a prior authentication procedure? If that procedure is required, what mechanism should be used to perform it? Is it possible to do so without secrets?

Herein lies the challenge in finding solutions for the management of secrets in containers.

Our requirements

When we took on the challenge of managing secrets securely, we set ourselves the following requirements:

- The system that supplies the certificates should run on any Docker platform, regardless of the orchestrator or cloud used. For this reason, we could not rely on mechanisms available only in specific orchestrators such as Kubernetes, Rancher or OpenShift, or on the proprietary services of public clouds such as those offered by AWS and GCE.

- Use of short-term certificates for authenticating microservices. To minimize the impact of credential theft, our services must authenticate with short-term certificates.

- Certificates should be generated automatically each time a container starts. Because they are short-term certificates, a new valid certificate must be generated for every container run.

- Certificates are generated per service and per environment, not per individual container. Here, a service is a group of containers that perform a specific task: if we have a service called “timeline,” each container in the “timeline” service receives a certificate valid for that service.

- Developers should not have access to credentials in production environments. That is, the system that supplies the credentials has to identify the containers on its own, without the developers who build the container images having access to sensitive information.

Our proposal

Our solution consists of two fundamental pillars.

- Vault: software developed by HashiCorp for the dynamic management of secrets. It can integrate with third-party systems, create and revoke access credentials, and provide them to users through a REST API. It also has built-in PKI functionality.

- Our own piece of software (let’s call it the “injector”), written in Go, whose aim is to request short-term certificates from Vault and inject them into the appropriate containers. The software can be found at: https://github.com/BBVA/security-vault

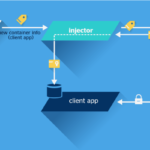

The injector

To perform this task, the injector runs on every host of the platform (as a standalone process or as a container), connects to the Docker socket and subscribes to container startup and shutdown events. These events contain information that allows the container to be identified, including its labels. Labels are the mechanism Docker uses to attach metadata to images, containers and other Docker objects.

For example, we can use labels to identify a container that runs the “timeline” application in the production environment as follows:

- environment=production

- app=timeline

Labels can be set when the image is built or when the container is run. When and how they are assigned matters, since they identify the container, and a certificate for a specific use is assigned based on that identification. The system that assigns these labels must therefore be audited and properly protected, since an incorrect label assignment can grant access to certificates with more privileges than deserved.

Generally, these labels should be managed by the orchestrator that launches the containers. Orchestrators already add several labels that help identify the environment and the service to which a container belongs. However, labels can also be set by other actors, even in the image itself before it reaches the orchestrator, as long as mechanisms are in place to ensure that the labels have not been altered by third parties. See Docker Notary as a tool for guaranteeing the integrity of images.

To generate the certificates, the injector authenticates to Vault using a previously configured token that allows it to make requests to the PKI module for the domains required in its specific environment (for example, timeline.production.example.io for the production environment).

Vault supports several authentication mechanisms, tokens being the simplest. Tokens are generally associated with a role, and they expire. This token should be supplied to the injector during its configuration, and its generation and renewal should be automated, either when the Docker host is deployed or by using mechanisms supplied by the orchestrator or cloud provider, such as Rancher’s secrets-bridge.

Once the certificate has been obtained, the injector writes it to a directory in the container’s filesystem. This directory can be in the container’s root filesystem or in a volume defined for this purpose. An in-memory volume is recommended, to ensure that no certificates are written to disk and that their storage is as volatile as possible.

The process flow is as follows:

- When a container is deployed on a host, the injector receives an event from Docker indicating that the container has started, along with its labels.

- The injector uses the identification labels to build a valid certificate request and sends it to Vault’s REST API over HTTP, so that Vault generates a short-term certificate.

- The injector writes, to the path specified in its configuration, the certificate (containing the public key), the private key, and the certificate of the Certification Authority (CA) that signed it. A process running in the container should then convert these files into the format required by the software. For example, for a Java application, a keystore and a truststore should be generated.

- Lastly, the container’s main application runs and connects securely to the required service using the newly issued certificate.

We encourage you to send us your feedback at BBVA-Labs@bbva.com and, if you are a BBVAer, please join us by sending your proposals for publication.